Overview

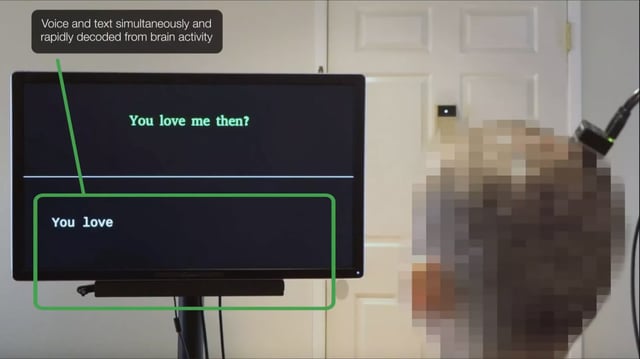

- The brain-computer interface (BCI) system uses AI to decode neural signals from the motor cortex into audible speech with less than one second of delay.

- The technology was successfully tested on Ann Johnson, a stroke survivor who lost her ability to speak 18 years ago, allowing her to communicate in her pre-injury voice.

- The system employs a high-density electrocorticography (ECoG) array to collect neural data and a personalized AI model trained on recordings of Ann's voice to produce natural-sounding speech.

- Researchers demonstrated that the system generalizes beyond its training data, accurately synthesizing words that were not in its training vocabulary.

- Future work aims to make the synthesized speech more expressive by conveying tone, pitch, and loudness. Researchers are also exploring broader applications, including non-invasive methods.