Overview

- Large language models such as ChatGPT and Gemini handle abstract terms well but fall short on sensory-rich words like “flower” because they have no experience of smell, touch or movement.

- Researchers compared human and AI representations of 4,442 words using the Glasgow and Lancaster Norms and found strong alignment on non-sensory concepts but major divergence on sensorimotor dimensions.

- Multimodal models trained on both text and images outperformed text-only LLMs in vision-related tasks, indicating that visual training data improves AI’s conceptual accuracy.

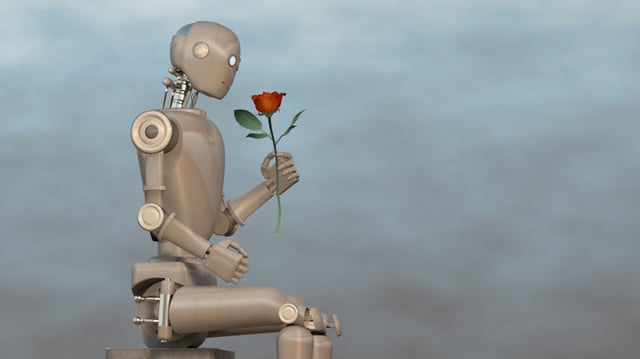

- The study’s authors suggest that adding robotic sensor inputs and embodied interaction could supply AI with the missing experiences needed for richer concept understanding.

- Disparities between human and AI concept representations may affect future AI-human communication, highlighting the need to ground language models in multisensory data.
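
One way to picture what "alignment" between human and AI word representations can mean is a rank correlation between human ratings and model ratings of the same words on a single dimension. The sketch below is illustrative only: this summary does not describe the study's actual analysis, so the choice of Spearman correlation, the function names, and every rating value are assumptions made up for the example.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences with nonzero variance."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical placeholder ratings on a "smell" dimension (NOT study data).
    words = ["flower", "justice", "coffee", "idea", "rain"]
    human_ratings = [4.5, 0.2, 4.8, 0.1, 3.0]
    model_ratings = [2.0, 0.5, 3.5, 1.0, 0.8]
    print(f"Spearman alignment over {len(words)} words: "
          f"{spearman(human_ratings, model_ratings):.2f}")
```

A value near 1 would mean the model orders the words much as people do on that dimension, while a value near 0 would mean the two orderings are unrelated. Repeating the calculation per dimension is one way to see where the representations diverge.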