Overview

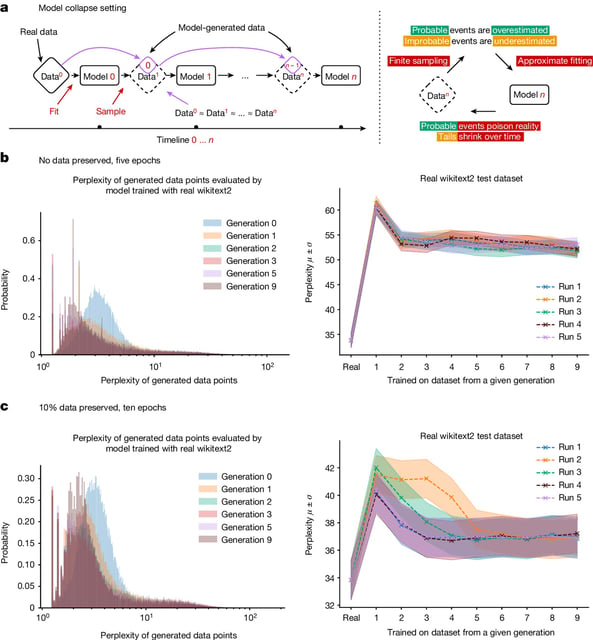

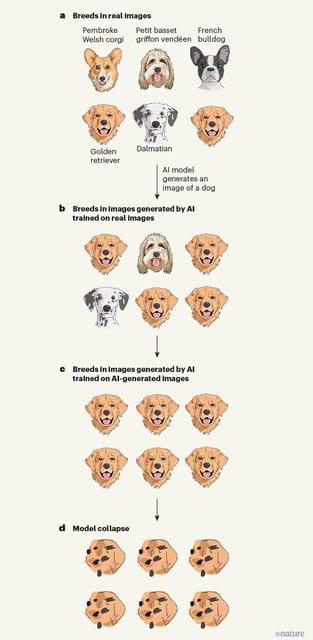

- Studies show that AI models trained on data produced by other AI models can suffer from 'model collapse,' progressively losing the ability to generate accurate outputs.

- The phenomenon arises as AI models rely increasingly on synthetic data, which lacks the diversity and quality of human-generated content.

- Over successive generations, model collapse leads AI systems to produce homogeneous and often nonsensical outputs.

- Experts suggest that preserving access to original, human-generated data is crucial to prevent this degradation.

- Proposed solutions include better data curation, filtering AI-generated content, and implementing watermarking techniques to distinguish synthetic data.
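
The loss of diversity described above can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions, not the setup used in the studies: the "model" is just a Gaussian fitted to a small sample, and each generation is trained only on samples drawn from the previous generation's fit. The function name `simulate_generations` and the parameters `sample_size` and `real_fraction` are hypothetical, chosen for illustration. Setting `real_fraction` above zero mixes fresh draws from the original distribution into every generation, a rough stand-in for preserving access to human-generated data.

```python
import random
import statistics


def simulate_generations(generations=200, sample_size=10,
                         real_fraction=0.0, seed=0):
    """Repeatedly fit a Gaussian to data sampled from the previous fit.

    Returns the fitted standard deviation of every generation, starting
    with generation 0 (the original "human" distribution, assumed N(0, 1)).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0
    history = [std]
    # Number of samples per generation drawn from the original distribution.
    n_real = round(sample_size * real_fraction)

    for _ in range(generations):
        # Fresh "human" data, if any is preserved.
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        # Synthetic data produced by the previous generation's model.
        synthetic = [rng.gauss(mean, std)
                     for _ in range(sample_size - n_real)]
        samples = real + synthetic

        # "Train" the next model: maximum-likelihood Gaussian fit.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)

    return history


if __name__ == "__main__":
    collapsed = simulate_generations(real_fraction=0.0)
    anchored = simulate_generations(real_fraction=0.5)
    for g in (0, 10, 50, 100, 200):
        print(f"generation {g:3d}: "
              f"synthetic-only std = {collapsed[g]:.3e}, "
              f"with original data std = {anchored[g]:.3e}")
```

In the synthetic-only run, estimation error compounds from one generation to the next and the fitted spread tends to shrink toward zero, which is the toy analogue of homogeneous outputs. Mixing original data back in keeps the spread from vanishing. Real language and image models are far more complex, so the sketch shows only the statistical intuition.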