Overview

- The Ohio State University study in Nature Human Behaviour compared how GPT-3.5, GPT-4, PaLM and Gemini rate 4,442 words with human ratings from the Glasgow and Lancaster Norms.

- Models lacking sensory grounding matched human conceptions of abstract terms but underperformed on words tied to touch, smell and motor activities.

- Vision-enabled LLMs trained on both text and images showed improved alignment with human ratings on visual concepts.

- Researchers caution that persistent sensory gaps in AI understanding may lead to miscommunication and limit real-world applicability.

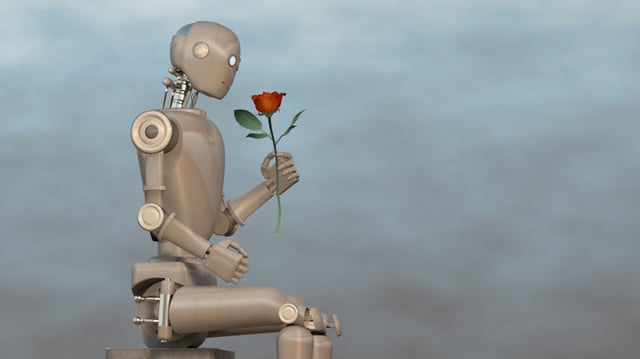

- The authors suggest integrating sensorimotor data and robotics into future models to enrich AI’s representation of human concepts.
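
The comparison described in the first bullet, model ratings set against human ratings for the same words, can be illustrated with a rank correlation. The sketch below is a minimal example under stated assumptions: this summary does not say which statistic the authors used, the function names `ranks` and `spearman` are hypothetical, and the numbers are invented toy values rather than data from the study.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the two rank lists."""
    rx, ry = ranks(xs), ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Invented toy ratings for five words on one dimension (NOT study data).
human_ratings = [1.0, 2.0, 3.0, 4.0, 5.0]
model_ratings = [1.5, 2.5, 2.0, 4.5, 5.0]

alignment = spearman(human_ratings, model_ratings)
print(f"rank correlation: {alignment:.2f}")
```

A value near 1 would indicate that the model orders the words much as people do on that dimension, while a value near 0 would indicate little agreement. Computing one such score per dimension (for example touch, smell or visual strength) is one way to make a contrast like the one between abstract and sensory words visible.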