Overview

- A study published today in Nature Human Behaviour compared how humans and LLMs represent 4,442 concepts, using the Glasgow and Lancaster norms as the human benchmark.

- LLMs from OpenAI (GPT-3.5, GPT-4) and Google (PaLM, Gemini) matched human judgments on abstract words but diverged on terms linked to smell, touch and other senses.

- Models trained on both text and images aligned more closely with human judgments of vision-related concepts than their text-only counterparts.

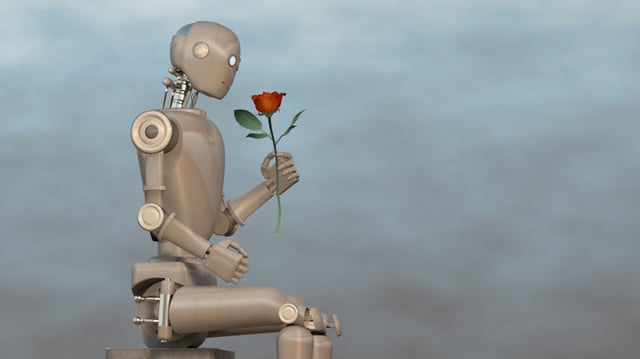

- The researchers attribute AI’s sensory blind spots to training that lacks real-world motor and sensory experience.

- The team proposes integrating sensor data and robotics into future models to give AI more human-like conceptual grounding.