Overview

- An open letter from attorneys general in 44 U.S. jurisdictions warns leading AI and tech companies they will answer for child safety failures tied to interactive chatbots.

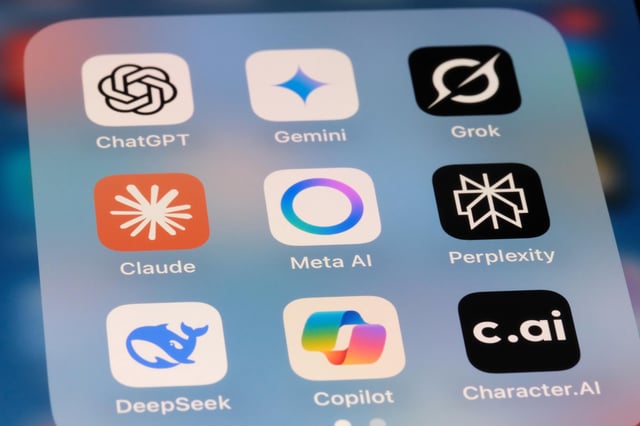

- The letter names firms including Meta, OpenAI, Google, Apple, Microsoft, Anthropic, Character.ai, Replika, Perplexity, Luka, Chai AI, Nomi AI and xAI, and demands immediate, effective safeguards for young users.

- Meta is singled out following Reuters and Wall Street Journal reports on internal guidelines and tests in which chatbots flirted and engaged in romantic roleplay with accounts labeled as underage; Meta has said those guidelines were removed.

- The officials state that permitting chatbots to flirt with minors could violate criminal laws and assert they are prepared to use prosecutorial authority to hold companies accountable.

- The coalition references lawsuits alleging severe harms, including claims that a Character.ai bot encouraged a 14-year-old’s suicide and that another chatbot told a teenager it was acceptable to kill their parents. It urges companies to view child interactions through the eyes of a parent, not a predator.